How Evaluations are Done at NRCan

Purpose

To provide information on how evaluations are planned, costed and conducted once they are on the five-year departmental Evaluation Plan.

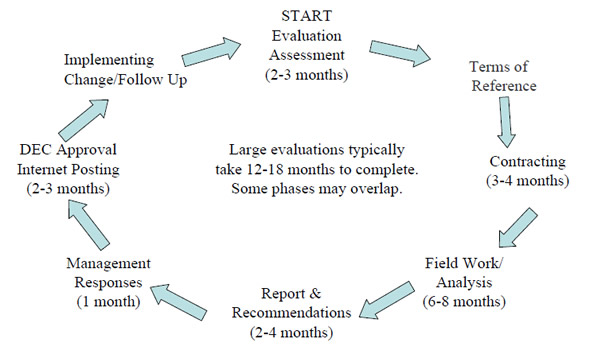

The Cycle for Evaluation Reports


Evaluation Assessment

- Evaluation Assessments are prepared based on:

- program profiles (e.g., objectives; logic models; organization and governance; expenditures; an assessment of performance information);

- the calibration of the evaluation based on risk criteria (i.e., program renewal; materiality; context and need; visibility; management practices and structure; policy and delivery complexity; performance measurement; and past evaluation and audit findings), as part of the overall five-year Plan;

- the evaluation questions (the TBS Policy identifies the generic questions, and these may be supplemented by others identified by the program);

- an initial plan on how to conduct the evaluation (i.e., methods to be used and levels of effort; contracting strategy; timelines; and estimated costs).

- Evaluation Assessments are developed based on experience and professional judgement.

Scope/Costing Considerations

- Based on the Evaluation Assessment, the scope and costing are developed, taking into account:

- the TBS policy’s minimum standards and its requirement for "multiple lines of evidence" (e.g., documents; interviews; administrative data; survey results; case studies; focus groups);

- SED’s internal risk assessment of whether additional methods are needed to support the quality, rigour and richness of the evaluation;

- the level of evidence that must be collected to allow conclusions to be drawn on each program (especially G&C programs).

- There are many risk factors (listed above) and other considerations, e.g.:

- number of programs, availability of data, past evaluations;

- enough interviewees to capture the full story;

- in-person interviews are always better than interviews conducted over the phone; surveys provide less in-depth information, but from many people;

- case studies provide in-depth knowledge of one project and how projects contribute to the achievement of program objectives.

- the need for contracting.

Contracting

- Contractors may be used as part of the evaluation team, based on:

- the need for subject matter expertise;

- internal capacity and timing considerations; and

- the need for third-party (non-NRCan) involvement.

- Value for money (optimal mix of internal and external resources) is determined through:

- the availability of internal staff to meet coverage requirements; and

- the competitive procurement process designed to achieve the best value for the Crown.

- Evaluation has a Supply Arrangement with nine evaluation firms*, established through a competitive process that qualified the firms and their contractors.

- Based on the TOR, an RFP is sent to a minimum of 4 firms.

- Contracting takes at least 3 months from SOW to signed contract.

- Responses to the RFP provide suggestions for altering the SOW and set out what the firm is prepared to do within the available budget.

- If a subject matter expert is required, Evaluation will contract directly with the expert to be part of the team (e.g., nuclear expertise).

* KPMG; Science Metrix; PMN; PRA; Goss Gilroy; TDV Global; Boulton; Baastel; and CPM.

Terms of Reference

- The TOR are derived from the Evaluation Assessment and scoping/costing analysis.

- TOR must be approved by the Evaluation Committee.

- TOR include:

- overview of the entity being evaluated;

- evaluation issues and questions;

- methods (e.g., interviews; surveys) to be used;

- contracting approach (i.e., in house; contracted out; or hybrid);

- timelines;

- resources required (e.g., estimated contract cost); and

- governance (e.g., a working group of program and evaluation officials).

Field Work

- Evaluators work on several projects simultaneously.

- Field work presents many challenges:

- field work requires the input of programs;

- contact information for survey respondents and interviewees is not always readily available;

- unplanned delays are very difficult for contractors to manage;

- work must be scheduled around seasonal cycles, e.g., interviewees are often not available during the summer, and contractors and programs are extremely busy before March 31.

- Technical reports are usually produced for each method (e.g., interviews). All information is analyzed by evaluation question.

- Preliminary findings are presented to programs to confirm findings and seek any additional information.

Report and Recommendations

- Preliminary findings serve to highlight and validate key issues from the collected evidence, and provide the basis for the report outline.

- Based on the findings, the report is written and the recommendations are developed.

- The draft report is vetted with the Program and discussions take place on the recommendations (do they flow from findings; do they make sense; can they be implemented).

- Report length is influenced by the complexity of the subject (e.g., one program or many) and the need to present evidence.

- Management responses and action plans are drafted by the program, and ADM approval is sought.

Approval & Posting of Reports

- The DEC advises the Deputy Minister (DM) on the report and on the management responses and action plans, which the DM must approve.

- DM-approved reports are provided to TBS and may be examined for quality during the annual MAF assessment of evaluation.

- The Evaluation Policy requires complete, approved evaluation reports, along with management responses and action plans, to be posted in both official languages in a timely manner (i.e., within 90 days).

- Reports are reviewed by ATIP and Communications.

- If required, Communications and the program prepare media lines.

Management Responses & Follow Up

- ADMs are responsible for implementing the action plans for each recommendation.

- The Evaluation Division follows up with Sectors on the implementation of action plans and updates DEC.

- DEC decides if the management responses have been satisfactorily implemented and when the file can be closed.

- The activity/program is reconsidered as part of the Evaluation Plan in the following evaluation cycle.

What Does Evaluation Add?

- Accountability (posted reports and input into DPRs)

- Strategic input into program decision-making and development (e.g., assistance in developing logic models and performance measurement strategies) including information for MCs and TB Submissions

- Neutral perspectives and additional data collection methods

- Evidence for internal reviews (Strategic Reviews/SOR etc.)

- Corrective change, starting before the evaluation is over

- Follow up on recommendations

Conclusions

- We hope this presentation has provided a better understanding of how evaluations are planned, costed and conducted.

- Questions? Send them to dvinette@nrcan.gc.ca.
